ResearchXL conversion optimization framework (course notes)

02/10/2020

If there's one way to procrastinate that I like more than watching video courses, it's watching good video courses. I know the CXL blog as one of the best destinations for high-quality professional marketing content, so I thought I'd give one of their video courses a go.

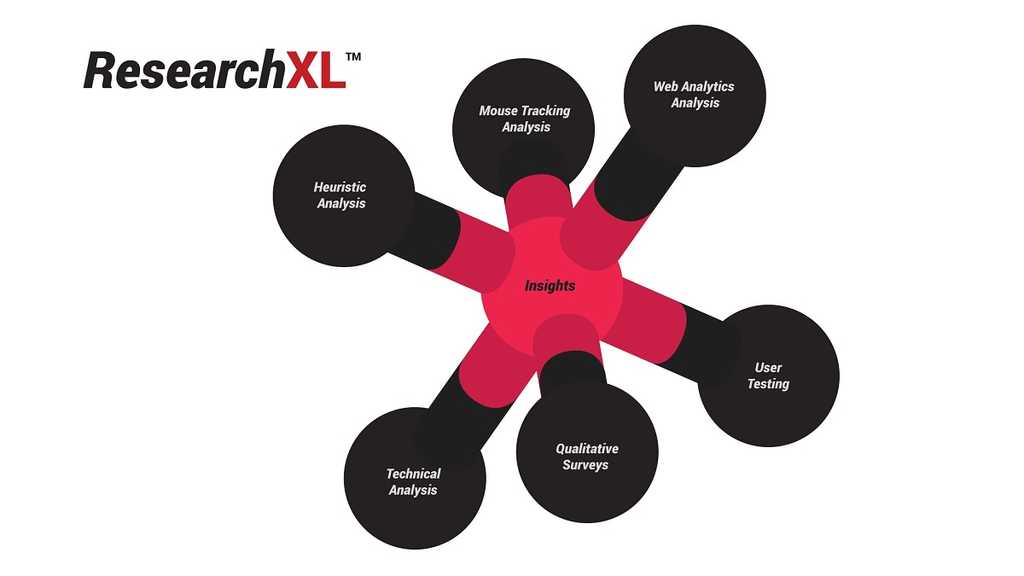

Which I did, and below are my notes from watching this short conversion optimization course from CXL Institute. The course discusses a framework called ResearchXL that guides their conversion optimization services. It's a 6-step process that includes technical and heuristic analysis, digital analytics, mouse tracking, qualitative research, and user testing.

Technical analysis

Technical analysis is the lowest hanging fruit. If a website isn't working on a conversion path, nothing else helps.

- Device and browser analysis. In Google Analytics, compare conversion rates between different browsers and devices. Take into account that mobile can convert much less than desktop, especially for expensive products (no such difference with cheaper products or regular transactions). A broad average is that mobile converts 25% less than desktop. Tablets usually convert the same as desktop. Pick underperforming browser segments and device segments.

- Page speed analysis. Open the site speed report in Google Analytics and select "per page". Sometimes, page load time isn't important (for longer pages). What is important is that above-the-fold content loads within 3 seconds and engages your audience while a page completes loading.
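To make the device and browser comparison concrete, here is a minimal Python sketch. It assumes you have exported sessions and conversions per device and browser from your analytics tool; the rows, the function names, and the 0.75 threshold are all made up for illustration and are not from the course. Each browser is compared against the overall rate of its own device, since mobile and desktop are expected to convert differently anyway.

```python
from collections import defaultdict

# Hypothetical export: (device, browser, sessions, conversions)
rows = [
    ("desktop", "Chrome", 12000, 360),
    ("desktop", "Safari", 3000, 84),
    ("mobile", "Chrome", 9000, 198),
    ("mobile", "Safari", 7000, 91),
    ("tablet", "Safari", 1500, 45),
]

def conversion_rates(rows):
    """Return {(device, browser): conversion rate} for every segment with traffic."""
    return {(d, b): c / s for d, b, s, c in rows if s > 0}

def underperformers(rows, threshold=0.75):
    """Flag segments converting below `threshold` times their device's overall rate.

    The threshold is an arbitrary assumption; tune it to your own data.
    """
    totals = defaultdict(lambda: [0, 0])  # device -> [sessions, conversions]
    for d, _, s, c in rows:
        totals[d][0] += s
        totals[d][1] += c
    flagged = []
    for (d, b), rate in conversion_rates(rows).items():
        device_rate = totals[d][1] / totals[d][0]
        if rate < threshold * device_rate:
            flagged.append((d, b, round(rate, 4), round(device_rate, 4)))
    return flagged

for segment in underperformers(rows):
    print(segment)
```

With this made-up data, only mobile Safari gets flagged, which would be the segment to open in a real device and click through the conversion path.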

Heuristic analysis

It's an experience-based assessment of a website. The outcome is not necessarily optimal, but it can be achieved quickly. You collect a group of up to 6 people (possibly including actual potential users), and go through their website experience, desktop and mobile separately. Feedback is given in one of four categories:

- Friction. What on a given page is causing doubts, hesitations and uncertainties? What makes the user experience difficult? How can we simplify? Note things that are difficult to understand ("what am I supposed to do next?"), difficult to do (20-field forms), things that undermine trust (blinking banners like we're still in 2000) etc.

- Distraction. What's on the page that is not helping the user take action? Is anything unnecessarily drawing attention? If it's not motivation, it's friction, and thus it might be a good idea to get rid of it. Every page should have a clear goal, and we're looking for everything that does not help a visitor take the one desired action. We're looking for conflicting calls to action, too.

- Motivation. What is it about your product or service that makes users want to engage? What are you doing to incentivize the user to take action? Understand why people need your product, what problems it solves, and reflect it back. When your target group feels understood, magic happens. Also, motivation balances friction: you can probably handle a 20-field form if you're given something of value in return. Is a visitor kept motivated enough to reach the goal?

- Relevance. Does the page meet user expectations, both in terms of content and design? How can it match what they want even more? Is the page actually relevant to the target user? Relevance and clarity boost conversions, while anxiety and distraction kill them. Urgency is what propels people to take action right away.

This kind of structured evaluation should be done for every website page that matters, on desktop and mobile separately, and written down — this defines areas of interest. 50% of the observations written down will probably turn out irrelevant. In the following stages of conversion research, these observations will be validated or invalidated through qualitative and quantitative research.

Digital analytics

Take a close look at data collected by Google Analytics or whatever similar tool you're using. Questions to ask yourself:

- Are things that matter measured? This includes every action that users can take on your site, and everything that contributes to your KPIs. How far are people scrolling? Do they interact with a slider at the top of a page? Are they watching an overview video, and if so, for how long? Are they searching? Are they interacting with filters, and if so, which ones? Are they clicking "Add to cart"? Are all funnel steps tracked? If things aren't measured, we can't improve them. Google Tag Manager is the easiest way to set up all these events. Heap just tracks everything out of the box, but becomes expensive quickly.

- Is data accurate? Conduct health checks. Unnaturally low bounce rates, double-counted revenue, subdomain tracking not configured properly are usual suspects.

- Where are the leaks? Identify specific pages and steps where money is lost. Check funnel performance. (50%: average percentage of people who proceed from cart to checkout in e-commerce applications.) Identify high-traffic pages with a high bounce rate or high exit rate. Check user flows, new vs returning numbers. All this should be done for mobile and desktop separately.

- What is the correlation between user behavior and your goals for the user? Looking at people who buy something, what other behaviors correlate with high purchase rates?

- What kinds of segmentation make sense for the website we're conducting research on? Find them.
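The "where are the leaks" check boils down to simple arithmetic on funnel counts. Below is a small Python sketch under my own assumptions: the funnel numbers are invented, the function names are hypothetical, and the only benchmark used is the 50% cart-to-checkout average mentioned in these notes.

```python
# Hypothetical funnel: sessions that reached each step, in order.
funnel = [
    ("product page", 20000),
    ("cart", 4000),
    ("checkout", 1800),
    ("purchase", 1200),
]

def step_rates(funnel):
    """Return (from_step, to_step, share of sessions that proceeded) per transition."""
    return [
        (a, b, n_b / n_a)
        for (a, n_a), (b, n_b) in zip(funnel, funnel[1:])
        if n_a > 0
    ]

def below_benchmark(funnel, benchmarks):
    """List transitions whose rate is lower than a known benchmark for that transition."""
    return [
        (a, b)
        for a, b, rate in step_rates(funnel)
        if (a, b) in benchmarks and rate < benchmarks[(a, b)]
    ]

# The 50% figure comes from the notes; any other benchmark would be your own.
benchmarks = {("cart", "checkout"): 0.5}

for a, b, rate in step_rates(funnel):
    print(f"{a} -> {b}: {rate:.1%}")
print("Below benchmark:", below_benchmark(funnel, benchmarks))
```

Running the same calculation separately on mobile and desktop counts keeps it in line with the advice to analyze the two separately.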

Mouse tracking

Here's a set of tools that you can use to get insights from cursor tracking in user sessions:

- Scroll maps (heat maps showing what content is scrolled to). Is important content positioned too low where few people are scrolling to? Where is the transition between hot and cold areas located? Should page design be corrected in order to facilitate further scrolling? (Sharp contrast between background color of adjacent elements is known to provoke this.)

- Click maps. In addition to their most direct use, these show what people are trying to click on that is not a link.

- Hover maps (where a visitor's cursor is). This is a scam: people don't always look where their cursor is, so accuracy of hover mapping is always questionable.

- Session replay. This is especially valuable to investigate what people are doing (or not doing) on pages with high drop-off rates.
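Scroll map tools do this for you, but the underlying idea of finding the transition between hot and cold areas is easy to sketch. The Python below assumes you can get the maximum scroll depth per session as a percentage of page height; the data and the function names are hypothetical.

```python
# Hypothetical data: deepest point each session scrolled to, in % of page height.
max_depths = [100, 90, 35, 30, 30, 35, 60, 30, 100, 35]

def scroll_reach(max_depths, step=10):
    """Share of sessions that scrolled to at least each depth bucket."""
    n = len(max_depths)
    return {d: sum(m >= d for m in max_depths) / n for d in range(step, 101, step)}

def sharpest_drop(reach):
    """Depth bucket where reach falls the most compared to the previous bucket."""
    depths = sorted(reach)
    pair = max(zip(depths, depths[1:]), key=lambda p: reach[p[0]] - reach[p[1]])
    return pair[1]

reach = scroll_reach(max_depths)
for depth, share in reach.items():
    print(f"{depth:>3}%: {share:.0%}")
print("Sharpest drop at:", sharpest_drop(reach), "%")
```

The bucket with the sharpest drop is where I'd look at the page design first, for example for a strong color contrast between adjacent blocks that makes the page look finished.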

Qualitative research

Qualitative research reveals the "why" behind user behavior. This is the most insightful part: we're talking to our actual users, peeking inside the mind of people we're trying to sell to. Without knowing what they're thinking, we're in the dark.

- Customer surveys. Surveys are best sent to people who have just purchased something or just signed up. You want to survey recent first-time buyers with no past purchase history, and no more than 30 days since their purchase (5 days since purchase is better). Send the survey via e-mail and ask open-ended questions, because you don't really know what the possible answers are. Don't waste time on useless questions like "how would you rate our service?": this isn't why you've reached out to them. Things to understand:

  - Points of friction.

  - One thing that nearly stopped them from buying.

  - What's their intent: what's the specific problem they're trying to solve.

  - How did you compare to competitors? What was the customer's shopping process? How many comparisons did they do? What mattered most to them?

- Onsite polls among visitors (who might or might not buy). 2 ways:

  - Exit surveys on high drop-off pages close to the money (such as shopping cart or checkout). Hit them with a pop-up when they're about to leave. Target users with engagement above average (such as those who spent over 20 seconds on a page or similar). Ask one open-ended question, such as "what's holding you back from completing a purchase/getting the product/whatever-goal-there-is right now?" You can preface this with a close-ended question to improve response rate: "Is there anything that prevents you from doing-that-thing? Yes/No; if Yes, let us know what exactly". The insights you're mining here can validate or invalidate observations from heuristic analysis.

  - Surveys on entering a specific page.

- Interviews. This is the absolute best way to get insights from real customers. Phone interviews are faster and easier to set up. Moderation skills are important. It's also useful to interview customer service staff for top questions, specifically pre-purchase questions and technical complaints. Interviewing sales people when the CTA is to call helps understand what answers to pre-purchase questions usually work well.

- Live chats: read through transcripts, analyze what's being asked.

User testing

Observe actual people (recruited to represent your target audience) interacting with your website while they're explaining the thought process out loud, and see what kinds of friction they encounter. Types of user testing:

- Remote unmoderated user testing. The tester performs a test via an online service on their own based on a prepared instruction list, without outside interference. Use TryMyUI, UserTesting or similar tools where you specify the number of people you want, expected demographics, provide website URL and the list of tasks to do. This is fast, cheap, but the least valuable of all user testing options.

- Remote moderated user testing. This can be a video call (via Skype, Zoom etc.) with a tester when you talk to them, give instructions and ask questions. Requires good moderation skills. Recruit from Facebook groups, Craigslist etc. on a paid basis: 25-100 USD depending on time spent, as cash or value like gift certificates.

- In-person moderated user testing. You sit with a tester (preferably in their environment) and have them work through a task while you're observing and asking questions. Requires good moderation skills, most time-consuming, and most expensive method.

Test scripts can be based on real-world common flows (revealed by analytics) or a user-specific story (who they are, what they're after). Break a script into small steps to observe actions one at a time. Two approaches are possible:

- Make the script as specific as possible (in terms of goals, not instructions).

- Make it intentionally broad, such as "find and buy a present for your friend's birthday".

As an extra, add an impression test ("five-second test") at the very beginning to gauge first impressions, test brand imagery, communication, and home page effectiveness.

In most cases 5 to 10 people for user testing is enough; 15 people max, then the law of diminishing returns kicks in.

Conduct user testing every time before rolling out a major change (on a staging server), or at least once a year. Fix obvious problems and test everything again.

A/B/n testing

Once the ResearchXL process is completed, the outcome is the list of problems that we're aware of.

Now, have a cross-functional team hypothesize as many solutions to the problems as possible. Variety of suggested solutions matters, as sometimes the idea that nobody believes in wins. "The better our hypothesis, the higher the chances that our treatment will work, and result in an uplift."

Based on your traffic, decide how many variations you want to test at once (could be A/B/C/D/n testing).

The statistics behind A/B/n testing are very complicated, and there's a lot of room to explore. Below are just the basics. The important thing is to know when a test is done or cannot be done. There are stopping rules:

- Sample size and statistical significance. How large a sample do you need to consider results valid? The smaller the difference in performance between variations, the larger the sample needed to validate it. There are A/B test sample size calculators, such as CXL's own calculator. If the calculator says you need 100,000 sessions to run a test, and you get 50,000 sessions a month, then you can't run a statistically significant test within a single month.

- Business cycle. To avoid outliers, it's better to run any test for at least two business cycles (which is 2 weeks for most businesses), even if you have reached the target sample size sooner.
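To get a feel for what a sample size calculator does, here is a rough Python sketch. It uses the textbook normal-approximation formula for comparing two conversion rates, which is my assumption and not necessarily what CXL's calculator implements. The defaults (5% significance, 80% power), the function names, and the two-week minimum (taken from the business cycle rule above) are illustrative, and corrections for testing several variations at once are ignored.

```python
from math import ceil, sqrt
from statistics import NormalDist

def sample_size_per_variation(baseline, relative_uplift, alpha=0.05, power=0.8):
    """Approximate sessions needed per variation for a two-sided two-proportion test.

    baseline: control conversion rate, e.g. 0.03
    relative_uplift: smallest relative improvement worth detecting, e.g. 0.10
    """
    p1 = baseline
    p2 = baseline * (1 + relative_uplift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)

def weeks_needed(n_per_variation, variations, weekly_sessions, min_weeks=2):
    """Weeks to collect the sample, never less than the minimum test duration."""
    total = n_per_variation * variations
    return max(min_weeks, ceil(total / weekly_sessions))

n = sample_size_per_variation(baseline=0.03, relative_uplift=0.10)
print("Sessions per variation:", n)
print("Weeks for A/B at 12,500 sessions/week:", weeks_needed(n, 2, 12500))
```

Halving the uplift you want to detect roughly quadruples the required sample, and every extra variation adds another full sample on top, which is why traffic limits how many variations you can test at once.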

Tests can be, by increasing level of sophistication:

- A/B split tests (one element against another),

- Multivariate tests (testing several elements at a time),

- Experimental research methods.

Most test building tools have a visual editor but they're very limited and shouldn't be used. For most tests, it's best to invite a frontend developer to build a test, because it's essentially about manipulating front-end code. There are also dedicated companies that can build tests for you.

Quality assurance for coded tests is very important because broken variations are the number one result killer. Every test should go through a QA process, ideally with a dedicated QA person.

There are 3 possible results of testing:

- Flat results (there's no difference between results). That's fine, go test something else.

- Treatment performed worse than the control: dismiss the treatment.

- Treatment performed better than the control: implement the winner.

In the first two cases, it makes sense to play with results and look at them from different perspectives: traffic sources, new vs returning, mobile vs desktop (if the test was combined), etc.
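As a rough illustration of how the three outcomes can be told apart, here is a Python sketch of a pooled two-proportion z-test. This is a standard textbook test that I'm assuming for the example; your testing tool may use different statistics. The function names, the 5% threshold, and the numbers are all hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for control A vs treatment B.

    Assumes both groups have traffic and at least one conversion overall.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

def classify(conv_a, n_a, conv_b, n_b, alpha=0.05):
    """Map a finished test to one of the three outcomes listed above."""
    z, p_value = two_proportion_z_test(conv_a, n_a, conv_b, n_b)
    if p_value >= alpha:
        return "flat"
    return "treatment wins" if z > 0 else "control wins"

# Hypothetical results: 10,000 sessions per variation.
print(classify(300, 10000, 310, 10000))
print(classify(300, 10000, 400, 10000))
```

The same function can be run per segment (mobile vs desktop, new vs returning), with the caveat that the more segments you slice, the more likely one of them looks like a winner by chance.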